Which two authentication types are supported for single sign-on in Founda-tional Services?

Basic Authentication

OpenShift authentication

PublicKey

Enterprise SAML

Local User Registry

In IBM Cloud Pak for Integration (CP4I) v2021.2, Foundational Services provide authentication and access control mechanisms, including Single Sign-On (SSO) integration. The two supported authentication types for SSO are:

OpenShift Authentication

IBM Cloud Pak for Integration leverages OpenShift authentication to integrate with existing identity providers.

OpenShift authentication supports OAuth-based authentication, allowing users to sign in using an OpenShift identity provider, such as LDAP, OIDC, or SAML.

This method enables seamless user access without requiring additional login credentials.

Enterprise SAML (Security Assertion Markup Language)

SAML authentication allows integration with enterprise identity providers (IdPs) such as IBM Security Verify, Okta, Microsoft Active Directory Federation Services (ADFS), and other SAML 2.0-compatible IdPs.

It provides federated identity management for SSO across enterprise applications, ensuring secure access to Cloud Pak services.

A. Basic Authentication – Incorrect

Basic authentication (username and password) is not used for Single Sign-On (SSO). SSO mechanisms require identity federation through OpenID Connect (OIDC) or SAML.

C. PublicKey – Incorrect

PublicKey authentication (such as SSH key-based authentication) is used for system-level access, not for SSO in Foundational Services.

E. Local User Registry – Incorrect

While local user registries can store credentials, they do not provide SSO capabilities. SSO requires federated identity providers like OpenShift authentication or SAML-based IdPs.

Why the other options are incorrect:

IBM Cloud Pak Foundational Services Authentication Guide

OpenShift Authentication and Identity Providers

IBM Cloud Pak for Integration SSO Configuration

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

An administrator is installing the Cloud Pak for Integration operators via the CLI. They have created a YAML file describing the "ibm-cp-integration" subscription which will be installed in a new namespace.

Which resource needs to be added before the subscription can be applied?

An OperatorGroup resource.

The ibm-foundational-services operator and subscription

The platform-navigator operator and subscription.

The ibm-common-services namespace.

When installing IBM Cloud Pak for Integration (CP4I) operators via the CLI, the Operator Lifecycle Manager (OLM) requires an OperatorGroup resource before applying a Subscription.

OperatorGroup defines the scope (namespace) in which the operator will be deployed and managed.

It ensures that the operator has the necessary permissions to install and operate in the specified namespace.

Without an OperatorGroup, the subscription for ibm-cp-integration cannot be applied, and the installation will fail.

Create a new namespace (if not already created):

Why an OperatorGroup is Required:Steps for CLI Installation:oc create namespace cp4i-namespace

Create the OperatorGroup YAML (e.g., operatorgroup.yaml):

apiVersion: operators.coreos.com/v1

kind: OperatorGroup

metadata:

name: cp4i-operatorgroup

namespace: cp4i-namespace

spec:

targetNamespaces:

- cp4i-namespace

Apply it using:

oc apply -f operatorgroup.yaml

Apply the Subscription YAML for ibm-cp-integration once the OperatorGroup exists.

B. The ibm-foundational-services operator and subscription

While IBM Foundational Services is required for some Cloud Pak features, its absence does not prevent the creation of an operator subscription.

C. The platform-navigator operator and subscription

Platform Navigator is an optional component and is not required before installing the ibm-cp-integration subscription.

D. The ibm-common-services namespace

The IBM Common Services namespace is used for foundational services, but it is not required for defining an operator subscription in a new namespace.

Why Other Options Are Incorrect:IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Operator Installation Guide

Red Hat OpenShift - Operator Lifecycle Manager (OLM) Documentation

IBM Common Services and Foundational Services Overview

Which statement describes the Aspera High Speed Transfer Server (HSTS) within IBM Cloud Pak for Integration?

HSTS allows an unlimited number of concurrent users to transfer files of up to 500GB at high speed using an Aspera client.

HSTS allows an unlimited number of concurrent users to transfer files of up to 100GB at high speed using an Aspera client.

HSTS allows an unlimited number of concurrent users to transfer files of up to 1TB at highs peed using an Aspera client.

HSTS allows an unlimited number of concurrent users to transfer files of any size at high speed using an Aspera client.

IBM Aspera High-Speed Transfer Server (HSTS) is a core component of IBM Cloud Pak for Integration (CP4I) that enables secure, high-speed file transfers over networks, regardless of file size, distance, or network conditions.

HSTS does not impose a file size limit, meaning users can transfer files of any size efficiently.

It uses IBM Aspera’s FASP (Fast and Secure Protocol) to achieve transfer speeds significantly faster than traditional TCP-based transfers, even over long distances or unreliable networks.

HSTS allows an unlimited number of concurrent users to transfer files using an Aspera client.

It ensures secure, encrypted, and efficient file transfers with features like bandwidth control and automatic retry in case of network failures.

A. HSTS allows an unlimited number of concurrent users to transfer files of up to 500GB at high speed using an Aspera client. (Incorrect)

Incorrect file size limit – HSTS supports files of any size without restrictions.

B. HSTS allows an unlimited number of concurrent users to transfer files of up to 100GB at high speed using an Aspera client. (Incorrect)

Incorrect file size limit – There is no 100GB limit in HSTS.

C. HSTS allows an unlimited number of concurrent users to transfer files of up to 1TB at high speed using an Aspera client. (Incorrect)

Incorrect file size limit – There is no 1TB limit in HSTS.

D. HSTS allows an unlimited number of concurrent users to transfer files of any size at high speed using an Aspera client. (Correct)

Correct answer – HSTS does not impose a file size limit, making it the best choice.

Analysis of the Options:

IBM Aspera High-Speed Transfer Server Documentation

IBM Cloud Pak for Integration - Aspera Overview

IBM Aspera FASP Technology

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

What is one method that can be used to uninstall IBM Cloud Pak for Integra-tion?

Uninstall.sh

Cloud Pak for Integration console

Operator Catalog

OpenShift console

Uninstalling IBM Cloud Pak for Integration (CP4I) v2021.2 requires removing the operators, instances, and related resources from the OpenShift cluster. One method to achieve this is through the OpenShift console, which provides a graphical interface for managing operators and deployments.

The OpenShift Web Console allows administrators to:

Navigate to Operators → Installed Operators and remove CP4I-related operators.

Delete all associated custom resources (CRs) and namespaces where CP4I was deployed.

Ensure that all PVCs (Persistent Volume Claims) and secrets associated with CP4I are also deleted.

This is an officially supported method for uninstalling CP4I in OpenShift environments.

Why Option D (OpenShift Console) is Correct:

A. Uninstall.sh → ❌ Incorrect

There is no official Uninstall.sh script provided by IBM for CP4I removal.

IBM’s documentation recommends manual removal through OpenShift.

B. Cloud Pak for Integration console → ❌ Incorrect

The CP4I console is used for managing integration components but does not provide an option to uninstall CP4I itself.

C. Operator Catalog → ❌ Incorrect

The Operator Catalog lists available operators but does not handle uninstallation.

Operators need to be manually removed via the OpenShift Console or CLI.

Explanation of Incorrect Answers:

Uninstalling IBM Cloud Pak for Integration

OpenShift Web Console - Removing Installed Operators

Best Practices for Uninstalling Cloud Pak on OpenShift

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

What are the two custom resources provided by IBM Licensing Operator?

IBM License Collector

IBM License Service Reporter

IBM License Viewer

IBM License Service

IBM License Reporting

The IBM Licensing Operator is responsible for managing and tracking IBM software license consumption in OpenShift and Kubernetes environments. It provides two key Custom Resources (CRs) to facilitate license tracking, reporting, and compliance in IBM Cloud Pak deployments:

IBM License Collector (IBMLicenseCollector)

This custom resource is responsible for collecting license usage data from IBM Cloud Pak components and aggregating the data for reporting.

It gathers information from various IBM products deployed within the cluster, ensuring that license consumption is tracked accurately.

IBM License Service (IBMLicenseService)

This custom resource provides real-time license tracking and metering for IBM software running in a containerized environment.

It is the core service that allows administrators to query and verify license usage.

The IBM License Service ensures compliance with IBM Cloud Pak licensing requirements and integrates with the IBM License Service Reporter for extended reporting capabilities.

B. IBM License Service Reporter – Incorrect

While IBM License Service Reporter exists as an additional reporting tool, it is not a custom resource provided directly by the IBM Licensing Operator. Instead, it is a component that enhances license reporting outside the cluster.

C. IBM License Viewer – Incorrect

No such CR exists. IBM License information can be viewed through OpenShift or CLI, but there is no "License Viewer" CR.

E. IBM License Reporting – Incorrect

While reporting is a function of IBM License Service, there is no custom resource named "IBM License Reporting."

Why the other options are incorrect:

IBM Licensing Service Documentation

IBM Cloud Pak Licensing Overview

OpenShift and IBM License Service Integration

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Select all that apply

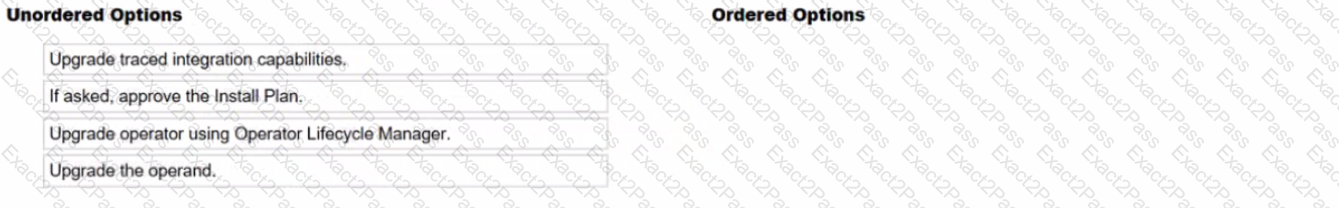

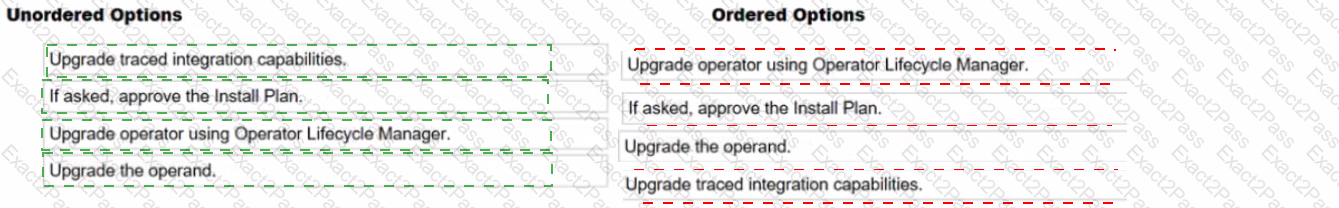

What is the correct order of the Operations Dashboard upgrade?

Upgrading the operator

If asked, approve the install plan

Upgrading the operand

Upgrading the traced integration capabilities

1️⃣ Upgrade operator using Operator Lifecycle Manager.

The Operator Lifecycle Manager (OLM) manages the upgrade of the Operations Dashboard operator in OpenShift.

This ensures that the latest version is available for managing operands.

2️⃣ If asked, approve the Install Plan.

Some installations require manual approval of the Install Plan to proceed with the operator upgrade.

If configured for automatic updates, this step may not be required.

3️⃣ Upgrade the operand.

Once the operator is upgraded, the operand (Operations Dashboard instance) needs to be updated to the latest version.

This step ensures that the upgraded operator manages the most recent operand version.

4️⃣ Upgrade traced integration capabilities.

Finally, upgrade any traced integration capabilities that depend on the Operations Dashboard.

This step ensures compatibility and full functionality with the updated components.

In IBM Cloud Pak for Integration (CP4I) v2021.2, the Operations Dashboard provides tracing and monitoring for integration capabilities. The correct upgrade sequence ensures a smooth transition with minimal downtime:

Upgrade the Operator using OLM – The Operator manages operands and must be upgraded first.

Approve the Install Plan (if required) – Some operator updates require manual approval before proceeding.

Upgrade the Operand – The actual Operations Dashboard component is upgraded after the operator.

Upgrade Traced Integration Capabilities – Ensures all monitored services are compatible with the new Operations Dashboard version.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Upgrading Operators using Operator Lifecycle Manager (OLM)

IBM Cloud Pak for Integration Operations Dashboard

Best Practices for Upgrading CP4I Components

For manually managed upgrades, what is one way to upgrade the Automation As-sets (formerly known as Asset Repository) CR?

Use the OpenShift web console to edit the YAML definition of the Asset Re-pository operand of the IBM Automation foundation assets operator.

In OpenShift web console, navigate to the OperatorHub and edit the Automa-tion foundation assets definition.

Open the terminal window and run "oc upgrade ..." command,

Use the OpenShift web console to edit the YAML definition of the IBM Auto-mation foundation assets operator.

In IBM Cloud Pak for Integration (CP4I) v2021.2, the Automation Assets (formerly known as Asset Repository) is managed through the IBM Automation Foundation Assets Operator. When manually upgrading Automation Assets, you need to update the Custom Resource (CR) associated with the Asset Repository.

The correct approach to manually upgrading the Automation Assets CR is to:

Navigate to the OpenShift Web Console.

Go to Operators → Installed Operators.

Find and select IBM Automation Foundation Assets Operator.

Locate the Asset Repository operand managed by this operator.

Edit the YAML definition of the Asset Repository CR to reflect the new version or required configuration changes.

Save the changes, which will trigger the update process.

This approach ensures that the Automation Assets component is upgraded correctly without disrupting the overall IBM Cloud Pak for Integration environment.

B. In OpenShift web console, navigate to the OperatorHub and edit the Automation foundation assets definition.

The OperatorHub is used for installing and subscribing to operators but does not provide direct access to modify Custom Resources (CRs) related to operands.

C. Open the terminal window and run "oc upgrade ..." command.

There is no oc upgrade command in OpenShift. Upgrades in OpenShift are typically managed through CR updates or Operator Lifecycle Manager (OLM).

D. Use the OpenShift web console to edit the YAML definition of the IBM Automation foundation assets operator.

Editing the operator’s YAML would affect the operator itself, not the Asset Repository operand, which is what needs to be upgraded.

Why Other Options Are Incorrect:IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Cloud Pak for Integration Knowledge Center

IBM Automation Foundation Assets Documentation

OpenShift Operator Lifecycle Manager (OLM) Guide

What are two ways an Aspera HSTS Instance can be created?

Foundational Services Dashboard

OpenShift console

Platform Navigator

IBM Aspera HSTS Installer

Terraform

IBM Aspera High-Speed Transfer Server (HSTS) is a key component of IBM Cloud Pak for Integration (CP4I) that enables secure, high-speed data transfers. There are two primary methods to create an Aspera HSTS instance in CP4I v2021.2:

OpenShift Console (Option B - Correct):

Aspera HSTS can be deployed within an OpenShift cluster using the OpenShift Console.

Administrators can deploy Aspera HSTS by creating an instance from the IBM Aspera HSTS operator, which is available through the OpenShift OperatorHub.

The deployment is managed using Kubernetes custom resources (CRs) and YAML configurations.

IBM Aspera HSTS Installer (Option D - Correct):

IBM provides an installer for setting up an Aspera HSTS instance on supported platforms.

This installer automates the process of configuring the required services and dependencies.

It is commonly used for standalone or non-OpenShift deployments.

Analysis of Other Options:

Option A (Foundational Services Dashboard) - Incorrect:

The Foundational Services Dashboard is used for managing IBM Cloud Pak foundational services like identity and access management but does not provide direct deployment of Aspera HSTS.

Option C (Platform Navigator) - Incorrect:

Platform Navigator is used to manage cloud-native integrations, but it does not directly create Aspera HSTS instances. Instead, it can be used to access and manage the Aspera HSTS services after deployment.

Option E (Terraform) - Incorrect:

While Terraform can be used to automate infrastructure provisioning, IBM does not provide an official Terraform module for directly creating Aspera HSTS instances in CP4I v2021.2.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Documentation: Deploying Aspera HSTS on OpenShift

IBM Aspera Knowledge Center: Aspera HSTS Installation Guide

IBM Redbooks: IBM Cloud Pak for Integration Deployment Guide

Which statement is true if multiple instances of Aspera HSTS exist in a clus-ter?

Each UDP port must be unique.

UDP and TCP ports have to be the same.

Each TCP port must be unique.

UDP ports must be the same.

In IBM Aspera High-Speed Transfer Server (HSTS), UDP ports are crucial for enabling high-speed data transfers. When multiple instances of Aspera HSTS exist in a cluster, each instance must be assigned a unique UDP port to avoid conflicts and ensure proper traffic routing.

Aspera HSTS relies on UDP for high-speed file transfers (as opposed to TCP, which is typically used for control and session management).

If multiple HSTS instances share the same UDP port, packet collisions and routing issues can occur.

Ensuring unique UDP ports across instances allows for proper load balancing and optimal performance.

B. UDP and TCP ports have to be the same.

Incorrect, because UDP and TCP serve different purposes in Aspera HSTS.

TCP is used for session initialization and control, while UDP is used for actual data transfer.

C. Each TCP port must be unique.

Incorrect, because TCP ports do not necessarily need to be unique across multiple instances, depending on the deployment.

TCP ports can be shared if proper load balancing and routing are configured.

D. UDP ports must be the same.

Incorrect, because using the same UDP port for multiple instances causes conflicts, leading to failed transfers or degraded performance.

Key Considerations for Aspera HSTS in a Cluster:Why Other Options Are Incorrect:IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM Aspera HSTS Configuration Guide

IBM Cloud Pak for Integration - Aspera Setup

IBM Aspera High-Speed Transfer Overview

Which of the following would contain mqsc commands for queue definitions to be executed when new MQ containers are deployed?

MORegistry

CCDTJSON

Operatorlmage

ConfigMap

In IBM Cloud Pak for Integration (CP4I) v2021.2, when deploying IBM MQ containers in OpenShift, queue definitions and other MQSC (MQ Script Command) commands need to be provided to configure the MQ environment dynamically. This is typically done using a Kubernetes ConfigMap, which allows administrators to define and inject configuration files, including MQSC scripts, into the containerized MQ instance at runtime.

A ConfigMap in OpenShift or Kubernetes is used to store configuration data as key-value pairs or files.

For IBM MQ, a ConfigMap can include an MQSC script that contains queue definitions, channel settings, and other MQ configurations.

When a new MQ container is deployed, the ConfigMap is mounted into the container, and the MQSC commands are executed to set up the queues.

Why is ConfigMap the Correct Answer?Example Usage:A sample ConfigMap containing MQSC commands for queue definitions may look like this:

apiVersion: v1

kind: ConfigMap

metadata:

name: my-mq-config

data:

10-create-queues.mqsc: |

DEFINE QLOCAL('MY.QUEUE') REPLACE

DEFINE QLOCAL('ANOTHER.QUEUE') REPLACE

This ConfigMap can then be referenced in the MQ Queue Manager’s deployment configuration to ensure that the queue definitions are automatically executed when the MQ container starts.

A. MORegistry - Incorrect

The MORegistry is not a component used for queue definitions. Instead, it relates to Managed Objects in certain IBM middleware configurations.

B. CCDTJSON - Incorrect

CCDTJSON refers to Client Channel Definition Table (CCDT) in JSON format, which is used for defining MQ client connections rather than queue definitions.

C. OperatorImage - Incorrect

The OperatorImage contains the IBM MQ Operator, which manages the lifecycle of MQ instances in OpenShift, but it does not store queue definitions or execute MQSC commands.

IBM Documentation: Configuring IBM MQ with ConfigMaps

IBM MQ Knowledge Center: Using MQSC commands in Kubernetes ConfigMaps

IBM Redbooks: IBM Cloud Pak for Integration Deployment Guide

Analysis of Other Options:IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Starling with Common Services 3.6, which two monitoring service modes are available?

OCP Monitoring

OpenShift Common Monitoring

C CP4I Monitoring

CS Monitoring

Grafana Monitoring

Starting with IBM Cloud Pak for Integration (CP4I) v2021.2, which uses IBM Common Services 3.6, there are two monitoring service modes available for tracking system health and performance:

OCP Monitoring (OpenShift Container Platform Monitoring) – This is the native OpenShift monitoring system that provides observability for the entire cluster, including nodes, pods, and application workloads. It uses Prometheus for metrics collection and Grafana for visualization.

CS Monitoring (Common Services Monitoring) – This is the IBM Cloud Pak for Integration-specific monitoring service, which provides additional observability features specifically for IBM Cloud Pak components. It integrates with OpenShift but focuses on Cloud Pak services and applications.

Option B (OpenShift Common Monitoring) is incorrect: While OpenShift has a Common Monitoring Stack, it is not a specific mode for IBM CP4I monitoring services. Instead, it is a subset of OCP Monitoring used for monitoring the OpenShift control plane.

Option C (CP4I Monitoring) is incorrect: There is no separate "CP4I Monitoring" service mode. CP4I relies on OpenShift's monitoring framework and IBM Common Services monitoring.

Option E (Grafana Monitoring) is incorrect: Grafana is a visualization tool, not a standalone monitoring service mode. It is used in conjunction with Prometheus in both OCP Monitoring and CS Monitoring.

IBM Cloud Pak for Integration Monitoring Documentation

IBM Common Services Monitoring Overview

OpenShift Monitoring Stack – Red Hat Documentation

Why the other options are incorrect:IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

In the Cloud Pak for Integration platform, which two roles can be used to connect to an LDAP Directory?

Cloud Pak administrator

Cluster administrator

Cluster manager

Cloud Pak root

Cloud Pak user

In IBM Cloud Pak for Integration (CP4I), Lightweight Directory Access Protocol (LDAP) integration allows centralized authentication and user management. To configure LDAP and establish a connection, users must have sufficient privileges within the Cloud Pak environment.

The two roles that can connect and configure LDAP are:

Cloud Pak administrator (A) – ✅ Correct

This role has full administrative access to Cloud Pak services, including configuring authentication and integrating with external directories like LDAP.

A Cloud Pak administrator can define identity providers and manage user access settings.

Cluster administrator (B) – ✅ Correct

The Cluster administrator role has control over the OpenShift cluster, including managing security settings, authentication providers, and infrastructure configurations.

Since LDAP integration requires changes at the cluster level, this role is also capable of configuring LDAP connections.

C. Cluster manager (Incorrect)

There is no predefined "Cluster manager" role in Cloud Pak for Integration that specifically handles LDAP integration.

D. Cloud Pak root (Incorrect)

No official "Cloud Pak root" role exists. Root-level access typically refers to system-level privileges but is not a designated role in Cloud Pak.

D. Cloud Pak user (Incorrect)

Cloud Pak users have limited permissions and can only access assigned services; they cannot configure LDAP or authentication settings.

Analysis of Incorrect Options:

IBM Cloud Pak for Integration - Managing Authentication and LDAP

OpenShift Cluster Administrator Role and Access Control

IBM Cloud Pak - Configuring External Identity Providers

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

When using the Platform Navigator, what permission is required to add users and user groups?

root

Super-user

Administrator

User

In IBM Cloud Pak for Integration (CP4I) v2021.2, the Platform Navigator is the central UI for managing integration capabilities, including user and access control. To add users and user groups, the required permission level is Administrator.

User Management Capabilities:

The Administrator role in Platform Navigator has full access to user and group management functions, including:

Adding new users

Assigning roles

Managing access policies

RBAC (Role-Based Access Control) Enforcement:

CP4I enforces RBAC to restrict actions based on roles.

Only Administrators can modify user access, ensuring security compliance.

Access Control via OpenShift and IAM Integration:

User management in CP4I integrates with IBM Cloud IAM or OpenShift User Management.

The Administrator role ensures correct permissions for authentication and authorization.

Why is "Administrator" the Correct Answer?

Why Not the Other Options?Option

Reason for Exclusion

A. root

"root" is a Linux system user and not a role in Platform Navigator. CP4I does not grant UI-based root access.

B. Super-user

No predefined "Super-user" role exists in CP4I. If referring to an elevated user, it still does not match the Administrator role in Platform Navigator.

D. User

Regular "User" roles have view-only or limited permissions and cannot manage users or groups.

Thus, the Administrator role is the correct choice for adding users and user groups in Platform Navigator.

IBM Cloud Pak for Integration - Platform Navigator Overview

Managing Users in Platform Navigator

Role-Based Access Control in CP4I

OpenShift User Management and Authentication

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Which publicly available document lists known Cloud Pak for Integration problems and limitations?

IBM Cloud Pak for Integration - Q&A

IBM Cloud Pak for Integration - Known Limitations

IBM Cloud Pak for Integration - Known Problems

IBM Cloud Pak for Integration - Latest News

IBM provides a publicly available document that lists the known issues, limitations, and restrictions for each release of IBM Cloud Pak for Integration (CP4I). This document is called "IBM Cloud Pak for Integration - Known Limitations."

It details any functional restrictions, unresolved issues, and workarounds applicable to the current and previous versions of CP4I.

This document helps administrators and developers understand current limitations before deploying or upgrading CP4I components.

It is updated regularly as IBM identifies new issues or resolves existing ones.

A. IBM Cloud Pak for Integration - Q&A (Incorrect)

A Q&A section typically contains frequently asked questions (FAQs) but does not specifically focus on known issues or limitations.

C. IBM Cloud Pak for Integration - Known Problems (Incorrect)

IBM does not maintain a document explicitly titled "Known Problems." Instead, known issues are included under "Known Limitations."

D. IBM Cloud Pak for Integration - Latest News (Incorrect)

The "Latest News" section typically covers new features, updates, and release announcements, but it does not provide a dedicated list of limitations or unresolved issues.

Analysis of Incorrect Options:

IBM Cloud Pak for Integration - Known Limitations

IBM Cloud Pak for Integration Documentation

IBM Support - Fixes and Known Issues

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

What is the minimum Red Hat OpenShift version for Cloud Pak for Integration V2021.2?

4.7.4

4.6.8

4.7.4

4.6.2

IBM Cloud Pak for Integration (CP4I) v2021.2 is designed to run on Red Hat OpenShift Container Platform (OCP). Each version of CP4I has a minimum required OpenShift version to ensure compatibility, performance, and security.

For Cloud Pak for Integration v2021.2, the minimum required OpenShift version is 4.7.4.

Compatibility: CP4I components, including IBM MQ, API Connect, App Connect, and Event Streams, require specific OpenShift versions to function properly.

Security & Stability: Newer OpenShift versions include critical security updates and performance improvements essential for enterprise deployments.

Operator Lifecycle Management (OLM): CP4I uses OpenShift Operators, and the correct OpenShift version ensures proper installation and lifecycle management.

Minimum required OpenShift version: 4.7.4

Recommended OpenShift version: 4.8 or later

Key Considerations for OpenShift Version Requirements:IBM’s Official Minimum OpenShift Version Requirements for CP4I v2021.2:

IBM officially requires at least OpenShift 4.7.4 for deploying CP4I v2021.2.

OpenShift 4.6.x versions are not supported for CP4I v2021.2.

OpenShift 4.7.4 is the first fully supported version that meets IBM's compatibility requirements.

Why Answer A (4.7.4) is Correct?

B. 4.6.8 → Incorrect

OpenShift 4.6.x is not supported for CP4I v2021.2.

IBM Cloud Pak for Integration v2021.1 supported OpenShift 4.6, but v2021.2 requires 4.7.4 or later.

C. 4.7.4 → Correct

This is the minimum required OpenShift version for CP4I v2021.2.

D. 4.6.2 → Incorrect

OpenShift 4.6.2 is outdated and does not meet the minimum version requirement for CP4I v2021.2.

Explanation of Incorrect Answers:

IBM Cloud Pak for Integration v2021.2 System Requirements

Red Hat OpenShift Version Support Matrix

IBM Cloud Pak for Integration OpenShift Deployment Guide

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

An account lockout policy can be created when setting up an LDAP server for the Cloud Pak for Integration platform. What is this policy used for?

It warns the administrator if multiple login attempts fail.

It prompts the user to change the password.

It deletes the user account.

It restricts access to the account if multiple login attempts fail.

In IBM Cloud Pak for Integration (CP4I) v2021.2, when integrating LDAP (Lightweight Directory Access Protocol) for authentication, an account lockout policy can be configured to enhance security.

The account lockout policy is designed to prevent brute-force attacks by temporarily or permanently restricting user access after multiple failed login attempts.

If a user enters incorrect credentials multiple times, the account is locked based on the configured policy.

The lockout can be temporary (auto-unlock after a period) or permanent (admin intervention required).

This prevents attackers from guessing passwords through repeated login attempts.

The policy's main function is to restrict access after repeated failed attempts, ensuring security.

It helps mitigate brute-force attacks and unauthorized access.

LDAP enforces the lockout rules based on the organization's security settings.

How the Account Lockout Policy Works:Why Answer D is Correct?

A. It warns the administrator if multiple login attempts fail. → Incorrect

While administrators may receive alerts, the primary function of the lockout policy is to restrict access, not just warn the admin.

B. It prompts the user to change the password. → Incorrect

An account lockout prevents login rather than prompting a password change.

Password change prompts usually happen for expired passwords, not failed logins.

C. It deletes the user account. → Incorrect

Lockout disables access but does not delete the user account.

Explanation of Incorrect Answers:

IBM Cloud Pak for Integration Security & LDAP Configuration

IBM Cloud Pak Foundational Services - Authentication & User Management

IBM Cloud Pak for Integration - Managing User Access

IBM LDAP Account Lockout Policy Guide

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

When using IBM Cloud Pak for Integration and deploying the DataPower Gateway service, which statement is true?

Only the datapower-cp4i image can be deployed.

A selected list of add-on modules can be enabled on DataPower Gateway.

This image deployment brings all the functionality of DataPower as it runs with root permissions.

The datapower-cp4i image will be downloaded from the dockerhub enterprise account of IBM.

When deploying IBM DataPower Gateway as part of IBM Cloud Pak for Integration (CP4I) v2021.2, administrators can enable a selected list of add-on modules based on their requirements. This allows customization and optimization of the deployment by enabling only the necessary features.

IBM DataPower Gateway deployed in Cloud Pak for Integration is a containerized version that supports modular configurations.

Administrators can enable or disable add-on modules to optimize resource utilization and security.

Some of these modules include:

API Gateway

XML Processing

MQ Connectivity

Security Policies

Why Option B is Correct:This flexibility helps in reducing overhead and ensuring that only the necessary capabilities are deployed.

A. Only the datapower-cp4i image can be deployed. → Incorrect

While datapower-cp4i is the primary image used within Cloud Pak for Integration, other variations of DataPower can also be deployed outside CP4I (e.g., standalone DataPower Gateway).

C. This image deployment brings all the functionality of DataPower as it runs with root permissions. → Incorrect

The DataPower container runs as a non-root user for security reasons.

Not all functionalities available in the bare-metal or VM-based DataPower appliance are enabled by default in the containerized version.

D. The datapower-cp4i image will be downloaded from the dockerhub enterprise account of IBM. → Incorrect

IBM does not use DockerHub for distributing CP4I container images.

Instead, DataPower images are pulled from the IBM Entitled Registry (cp.icr.io), which requires an IBM Entitlement Key for access.

Explanation of Incorrect Answers:

IBM Cloud Pak for Integration - Deploying DataPower Gateway

IBM DataPower Gateway Container Deployment Guide

IBM Entitled Registry - Pulling CP4I Images

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Which diagnostic information must be gathered and provided to IBM Support for troubleshooting the Cloud Pak for Integration instance?

Standard OpenShift Container Platform logs.

Platform Navigator event logs.

Cloud Pak For Integration activity logs.

Integration tracing activity reports.

When troubleshooting an IBM Cloud Pak for Integration (CP4I) v2021.2 instance, IBM Support requires diagnostic data that provides insights into the system’s performance, errors, and failures. The most critical diagnostic information comes from the Standard OpenShift Container Platform logs because:

CP4I runs on OpenShift, and its components are deployed as Kubernetes pods, meaning logs from OpenShift provide essential insights into infrastructure-level and application-level issues.

The OpenShift logs include:

Pod logs (oc logs

Event logs (oc get events), which provide details about errors, scheduling issues, or failed deployments.

Node and system logs, which help diagnose resource exhaustion, networking issues, or storage failures.

B. Platform Navigator event logs → Incorrect

While Platform Navigator manages CP4I services, its event logs focus mainly on UI-related issues and do not provide deep troubleshooting data needed for IBM Support.

C. Cloud Pak For Integration activity logs → Incorrect

CP4I activity logs include component-specific logs but do not cover the underlying OpenShift platform or container-level issues, which are crucial for troubleshooting.

D. Integration tracing activity reports → Incorrect

Integration tracing focuses on tracking API and message flows but is not sufficient for diagnosing broader CP4I system failures or deployment issues.

Explanation of Incorrect Answers:

IBM Cloud Pak for Integration Troubleshooting Guide

OpenShift Log Collection for Support

IBM MustGather for Cloud Pak for Integration

Red Hat OpenShift Logging and Monitoring

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Which statement is true regarding an upgrade of App Connect Operators?

The App Connect Operator can be upgraded automatically when a new compatible version is available.

The setting for automatic upgrades can only be specified at the time the App Connect Operator is installed.

Once the App Connect Operator is installed the approval strategy cannot be modified.

There is no option to require manual approval for updating the App Connect Operator.

In IBM Cloud Pak for Integration (CP4I), operators—including the App Connect Operator—are managed through Operator Lifecycle Manager (OLM) in Red Hat OpenShift. OLM provides two upgrade approval strategies:

Automatic: The operator is upgraded as soon as a new compatible version becomes available.

Manual: An administrator must manually approve the upgrade.

The App Connect Operator supports automatic upgrades when configured with the Automatic approval strategy during installation or later through OperatorHub settings. If this setting is enabled, OpenShift will detect new compatible versions and upgrade the operator without requiring manual intervention.

B. The setting for automatic upgrades can only be specified at the time the App Connect Operator is installed.

Incorrect, because the approval strategy can be modified later in OpenShift’s OperatorHub or via CLI.

C. Once the App Connect Operator is installed, the approval strategy cannot be modified.

Incorrect, because OpenShift allows administrators to change the approval strategy at any time after installation.

D. There is no option to require manual approval for updating the App Connect Operator.

Incorrect, because OLM provides both manual and automatic approval options. If manual approval is set, the administrator must manually approve each upgrade.

Why Other Options Are Incorrect:IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM App Connect Operator Upgrade Process

OpenShift Operator Lifecycle Manager (OLM) Documentation

IBM Cloud Pak for Integration Operator Management

An administrator is deploying an MQ topology, and is checking that their Cloud Pak (or Integration (CP4I) license entitlement is covered. The administrator has 100 VPCs of CP4I licenses to use. The administrator wishes to deploy an MQ topology using the NativeHA feature.

Which statement is true?

No licenses, because only RDQM is supported on CP4I.

License entitlement is required for all of the HA replicas of the NativeHA MQ, not only the active MQ.

A different license from the standard CP4I license must be purchased from IBM to use the NativeHA feature.

The administrator can use their pool of CP4I licenses.

In IBM Cloud Pak for Integration (CP4I), IBM MQ Native High Availability (NativeHA) is a feature that enables automated failover and redundancy by maintaining multiple replicas of an MQ queue manager.

When using NativeHA, licensing in CP4I is calculated based on the total number of VPCs (Virtual Processor Cores) consumed by all MQ instances, including both active and standby replicas.

IBM MQ NativeHA uses a multi-replica setup, meaning there are multiple queue manager instances running simultaneously for redundancy.

Licensing in CP4I is based on the total CPU consumption of all running MQ replicas, not just the active instance.

Therefore, the administrator must ensure that all HA replicas are accounted for in their license entitlement.

Why Option B is Correct:

A. No licenses, because only RDQM is supported on CP4I. (Incorrect)

IBM MQ NativeHA is fully supported on CP4I alongside RDQM (Replicated Data Queue Manager). NativeHA is actually preferred over RDQM in containerized OpenShift environments.

C. A different license from the standard CP4I license must be purchased from IBM to use the NativeHA feature. (Incorrect)

No separate license is required for NativeHA – it is covered under the CP4I licensing model.

D. The administrator can use their pool of CP4I licenses. (Incorrect)

Partially correct but incomplete – while the administrator can use their CP4I licenses, they must ensure that all HA replicas are included in the license calculation, not just the active instance.

Analysis of the Incorrect Options:

IBM MQ Native High Availability Licensing

IBM Cloud Pak for Integration Licensing Guide

IBM MQ on CP4I - Capacity Planning and Licensing

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Given the high availability requirements for a Cloud Pak for Integration deployment, which two components require a quorum for high availability?

Multi-instance Queue Manager

API Management (API Connect)

Application Integration (App Connect)

Event Gateway Service

Automation Assets

In IBM Cloud Pak for Integration (CP4I) v2021.2, ensuring high availability (HA) requires certain components to maintain a quorum. A quorum is a mechanism where a majority of nodes or instances must agree on a state to prevent split-brain scenarios and ensure consistency.

IBM MQ Multi-instance Queue Manager is designed for high availability.

It runs in an active-standby configuration where a shared storage is required, and a quorum ensures that failover occurs correctly.

If the primary queue manager fails, quorum logic ensures that another instance assumes control without data corruption.

API Connect operates in a distributed cluster architecture where multiple components (such as the API Manager, Analytics, and Gateway) work together.

A quorum is required to ensure consistency and avoid conflicts in API configurations across multiple instances.

API Connect uses MongoDB as its backend database, and MongoDB requires a replica set quorum for high availability and failover.

Why "Multi-instance Queue Manager" (A) Requires a Quorum?Why "API Management (API Connect)" (B) Requires a Quorum?

Why Not the Other Options?Option

Reason for Exclusion

C. Application Integration (App Connect)

While App Connect can be deployed in HA mode, it does not require a quorum. It uses Kubernetes scaling and load balancing instead.

D. Event Gateway Service

Event Gateway is stateless and relies on horizontal scaling rather than quorum-based HA.

E. Automation Assets

This component stores automation-related assets but does not require quorum for HA. It typically relies on persistent storage replication.

Thus, Multi-instance Queue Manager (IBM MQ) and API Management (API Connect) require quorum to ensure high availability in Cloud Pak for Integration.

IBM MQ Multi-instance Queue Manager HA

IBM API Connect High Availability and Quorum

CP4I High Availability Architecture

MongoDB Replica Set Quorum in API Connect

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Which service receives audit data and collects application logs in Cloud Pak Foundational Services?

logging service

audit-syslog-service

systemd journal

fluentd service

In IBM Cloud Pak Foundational Services, the audit-syslog-service is responsible for receiving audit data and collecting application logs. This service ensures that security and compliance-related events are properly recorded and made available for analysis.

The audit-syslog-service is a key component of Cloud Pak's logging and monitoring framework, specifically designed to capture audit logs from various services.

It can forward logs to external SIEM (Security Information and Event Management) systems or centralized log collection tools for further analysis.

It helps organizations meet compliance and governance requirements by maintaining detailed audit trails.

Why is audit-syslog-service the correct answer?

A. logging service (Incorrect)

While Cloud Pak Foundational Services include a logging service, it is primarily for general application logging and does not specifically handle audit data collection.

C. systemd journal (Incorrect)

systemd journal is the default system log manager on Linux but is not the dedicated service for handling Cloud Pak audit logs.

D. fluentd service (Incorrect)

Fluentd is a log forwarding agent used for collecting and transporting logs, but it does not directly receive audit data in Cloud Pak Foundational Services. It can be used in combination with audit-syslog-service for log aggregation.

Analysis of the Incorrect Options:

IBM Cloud Pak Foundational Services - Audit Logging

IBM Cloud Pak for Integration Logging and Monitoring

Configuring Audit Log Forwarding in IBM Cloud Pak

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Assuming thai IBM Common Services are installed in the ibm-common-services namespace and the Cloud Pak for Integration is installed in the cp4i namespace, what is needed for the authentication to the License Service APIs?

A token available in ibm-licensing-token secret in the cp4i namespace.

A password available in platform-auth-idp-credentials in the ibm-common-services namespace.

A password available in ibm-entitlement-key key in the cp4i namespace.

A token available in ibm-licensing-token secret in the ibm-common-services namespace.

IBM Cloud Pak for Integration (CP4I) relies on IBM Common Services for authentication, licensing, and other foundational functionalities. The License Service API is a key component that enables the monitoring and reporting of software license usage across the cluster.

Authentication to the License Service APITo authenticate to the IBM License Service APIs, a token is required, which is stored in the ibm-licensing-token secret within the ibm-common-services namespace (where IBM Common Services are installed).

When Cloud Pak for Integration (installed in the cp4i namespace) needs to interact with the License Service API, it retrieves the authentication token from this secret in the ibm-common-services namespace.

The ibm-licensing-token secret is automatically created in the ibm-common-services namespace when the IBM License Service is deployed.

This token is required for authentication when querying licensing information via the License Service API.

Since IBM Common Services are installed in ibm-common-services, and the licensing service is part of these foundational services, authentication tokens are stored in this namespace rather than the cp4i namespace.

Why is Option D Correct?

Analysis of Other Options:Option

Correct/Incorrect

Reason

A. A token available in ibm-licensing-token secret in the cp4i namespace.

❌ Incorrect

The licensing token is stored in the ibm-common-services namespace, not in cp4i.

B. A password available in platform-auth-idp-credentials in the ibm-common-services namespace.

❌ Incorrect

This secret is related to authentication for the IBM Identity Provider (OIDC) and is not used for licensing authentication.

C. A password available in ibm-entitlement-key in the cp4i namespace.

❌ Incorrect

The ibm-entitlement-key is used for accessing IBM Container Registry to pull images, not for licensing authentication.

D. A token available in ibm-licensing-token secret in the ibm-common-services namespace.

✅ Correct

This is the correct secret that contains the required token for authentication to the License Service API.

IBM Documentation: IBM License Service Authentication and Tokens

IBM Knowledge Center: Managing License Service in OpenShift

IBM Redbooks: IBM Cloud Pak for Integration Deployment Guide

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

What is the License Service's frequency of refreshing data?

1 hour.

30 seconds.

5 minutes.

30 minutes.

In IBM Cloud Pak Foundational Services, the License Service is responsible for collecting, tracking, and reporting license usage data. It ensures compliance by monitoring the consumption of IBM Cloud Pak licenses across the environment.

The License Service refreshes its data every 5 minutes to keep the license usage information up to date.

This frequent update cycle helps organizations maintain accurate tracking of their entitlements and avoid non-compliance issues.

A. 1 hour (Incorrect)

The License Service updates its records more frequently than every hour to provide timely insights.

B. 30 seconds (Incorrect)

A refresh interval of 30 seconds would be too frequent for license tracking, leading to unnecessary overhead.

C. 5 minutes (Correct)

The IBM License Service refreshes its data every 5 minutes, ensuring real-time tracking without excessive system load.

D. 30 minutes (Incorrect)

A 30-minute refresh would delay the reporting of license usage, which is not the actual behavior of the License Service.

Analysis of the Options:

IBM License Service Overview

IBM Cloud Pak License Service Data Collection Interval

IBM Cloud Pak Compliance and License Reporting

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

What is the default time period for the data retrieved by the License Service?

90 days.

The full period from the deployment.

30 days.

60 days.

In IBM Cloud Pak for Integration (CP4I) v2021.2, the IBM License Service collects and retains license usage data for a default period of 90 days. This data is crucial for auditing and compliance, ensuring that software usage aligns with licensing agreements.

The IBM License Service continuously collects and stores licensing data.

By default, it retains data for 90 days before older data is automatically removed.

Users can query and retrieve usage reports from this 90-day period.

The License Service supports regulatory compliance by ensuring transparent tracking of software usage.

B. The full period from the deployment – Incorrect. The License Service does not retain data indefinitely; it follows a rolling 90-day retention policy.

C. 30 days – Incorrect. The default retention period is longer than 30 days.

D. 60 days – Incorrect. The default is 90 days, not 60.

IBM License Service Documentation

IBM Cloud Pak for Integration v2021.2 – Licensing Guide

IBM Support – License Service Data Retention Policy

Key Details About the IBM License Service Data Retention:Why Not the Other Options?IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References

Which command will attach the shell to a running container?

run….

attach….

connect...

shell…

In IBM Cloud Pak for Integration (CP4I) v2021.2, which runs on Red Hat OpenShift, administrators often need to interact with running containers for troubleshooting, debugging, or configuration changes. The correct command to attach the shell to a running container is:

oc attach

This command connects the user to the standard input (stdin), output (stdout), and error (stderr) streams of the specified container inside a pod.

Alternatively, for interactive shell access, administrators can use:

oc exec -it

or

oc exec -it

if the container supports Bash.

A. run → ❌ Incorrect

The oc run command creates a new pod rather than attaching to an existing running container.

C. connect → ❌ Incorrect

There is no oc connect command in OpenShift or Kubernetes for attaching to a container shell.

D. shell → ❌ Incorrect

OpenShift and Kubernetes do not have a shell command for connecting to a running container.

Instead, the oc exec command is used to start an interactive shell session inside a container.

Explanation of Incorrect Answers:

OpenShift CLI (oc) Command Reference

IBM Cloud Pak for Integration Troubleshooting Guide

Kubernetes attach vs exec Commands

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Which storage type is supported with the App Connect Enterprise (ACE) Dash-board instance?

Ephemeral storage

Flash storage

File storage

Raw block storage

In IBM Cloud Pak for Integration (CP4I) v2021.2, App Connect Enterprise (ACE) Dashboard requires persistent storage to maintain configurations, logs, and runtime data. The supported storage type for the ACE Dashboard instance is file storage because:

It supports ReadWriteMany (RWX) access mode, allowing multiple pods to access shared data.

It ensures data persistence across restarts and upgrades, which is essential for managing ACE integrations.

It is compatible with NFS, IBM Spectrum Scale, and OpenShift Container Storage (OCS), all of which provide file system-based storage.

A. Ephemeral storage – Incorrect

Ephemeral storage is temporary and data is lost when the pod restarts or gets rescheduled.

ACE Dashboard needs persistent storage to retain configuration and logs.

B. Flash storage – Incorrect

Flash storage refers to SSD-based storage and is not specifically required for the ACE Dashboard.

While flash storage can be used for better performance, ACE requires file-based persistence, which is different from flash storage.

D. Raw block storage – Incorrect

Block storage is low-level storage that is used for databases and applications requiring high-performance IOPS.

ACE Dashboard needs a shared file system, which block storage does not provide.

Why the other options are incorrect:

IBM App Connect Enterprise (ACE) Storage Requirements

IBM Cloud Pak for Integration Persistent Storage Guide

OpenShift Persistent Volume Types

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

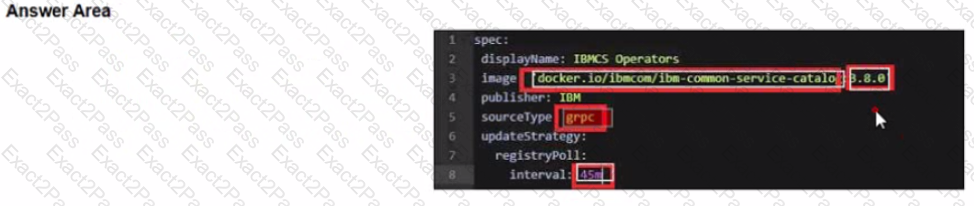

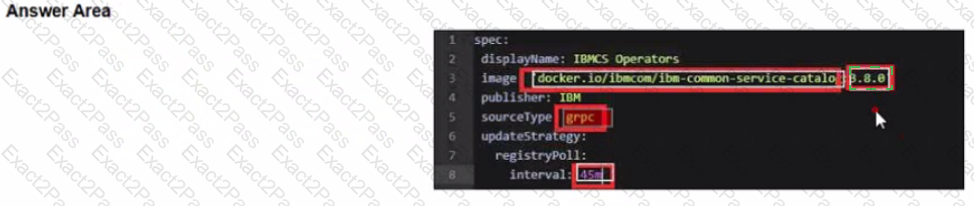

Before upgrading the Foundational Services installer version, the installer catalog source image must have the correct tag. To always use the latest catalog click on where the text 'latest' should be inserted into the image below?

Upgrading from version 3.4.x and 3.5.x to version 3.6.x

Before you upgrade the foundational services installer version, make sure that the installer catalog source image has the correct tag.

If, during installation, you had set the catalog source image tag as latest, you do not need to manually change the tag.

If, during installation, you had set the catalog source image tag to a specific version, you must update the tag with the version that you want to upgrade to. Or, you can change the tag to latest to automatically complete future upgrades to the most current version.

To update the tag, complete the following actions.

To update the catalog source image tag, run the following command.

oc edit catalogsource opencloud-operators -n openshift-marketplace

Update the image tag.

Change image tag to the specific version of 3.6.x. The 3.6.3 tag is used as an example here:

spec:

displayName: IBMCS Operators

image: 'docker.io/ibmcom/ibm-common-service-catalog:3.6.3'

publisher: IBM

sourceType: grpc

updateStrategy:

registryPoll:

interval: 45m

Change the image tag to latest to automatically upgrade to the most current version.

spec:

displayName: IBMCS Operators

image: 'icr.io/cpopen/ibm-common-service-catalog:latest'

publisher: IBM

sourceType: grpc

updateStrategy:

registryPoll:

interval: 45m

To check whether the image tag is successfully updated, run the following command:

oc get catalogsource opencloud-operators -n openshift-marketplace -o jsonpath='{.spec.image}{"\n"}{.status.connectionState.lastObservedState}'

The following sample output has the image tag and its status:

icr.io/cpopen/ibm-common-service-catalog:latest

READY%

https://www.ibm.com/docs/en/cpfs?topic=online-upgrading-foundational-services-from-operator-release

What is the outcome when the API Connect operator is installed at the cluster scope?

Automatic updates will be restricted by the approval strategy.

API Connect services will be deployed in the default namespace.

The operator installs in a production deployment profile.

The entire cluster effectively behaves as one large tenant.

When the API Connect operator is installed at the cluster scope, it means that the operator has permissions and visibility across the entire Kubernetes or OpenShift cluster, rather than being limited to a single namespace. This setup allows multiple namespaces to utilize the API Connect resources, effectively making the entire cluster behave as one large tenant.

Cluster-wide installation enables shared services across multiple namespaces, ensuring that API management is centralized.

Multi-tenancy behavior occurs because all API Connect components, such as the Gateway, Analytics, and Portal, can serve multiple teams or applications within the cluster.

Operator Lifecycle Manager (OLM) governs how the API Connect operator is deployed and managed across namespaces, reinforcing the unified behavior across the cluster.

IBM API Connect Operator Documentation

IBM Cloud Pak for Integration - Installing API Connect

IBM Redbook - Cloud Pak for Integration Architecture Guide

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

Which statement is true about enabling open tracing for API Connect?

Only APIs using API Gateway can be traced in the Operations Dashboard.

API debug data is made available in OpenShift cluster logging.

This feature is only available in non-production deployment profiles

Trace data can be viewed in Analytics dashboards

Open Tracing in IBM API Connect allows for distributed tracing of API calls across the system, helping administrators analyze performance bottlenecks and troubleshoot issues. However, this capability is specifically designed to work with APIs that utilize the API Gateway.

Option A (Correct Answer): IBM API Connect integrates with OpenTracing for API Gateway, allowing the tracing of API requests in the Operations Dashboard. This provides deep visibility into request flows and latencies.

Option B (Incorrect): API debug data is not directly made available in OpenShift cluster logging. Instead, API tracing data is captured using OpenTracing-compatible tools.

Option C (Incorrect): OpenTracing is available for all deployment profiles, including production, not just non-production environments.

Option D (Incorrect): Trace data is not directly visible in Analytics dashboards but rather in the Operations Dashboard where administrators can inspect API request traces.

IBM Cloud Pak for Integration (CP4I) v2021.2 Administration References:

IBM API Connect Documentation – OpenTracing

IBM Cloud Pak for Integration - API Gateway Tracing

IBM API Connect Operations Dashboard Guide