What are purposes for creating a storage integration? (Choose three.)

Control access to Snowflake data using a master encryption key that is maintained in the cloud provider’s key management service.

Store a generated identity and access management (IAM) entity for an external cloud provider regardless of the cloud provider that hosts the Snowflake account.

Support multiple external stages using one single Snowflake object.

Avoid supplying credentials when creating a stage or when loading or unloading data.

Create private VPC endpoints that allow direct, secure connectivity between VPCs without traversing the public internet.

Manage credentials from multiple cloud providers in one single Snowflake object.

The purpose of creating a storage integration in Snowflake includes:B. Store a generated identity and access management (IAM) entity for an external cloud provider - This helps in managing authentication and authorization with external cloud storage without embedding credentials in Snowflake. It supports various cloud providers like AWS, Azure, or GCP, ensuring that the identity management is streamlined across platforms.C. Support multiple external stages using one single Snowflake object - Storage integrations allow you to set up access configurations that can be reused across multiple external stages, simplifying the management of external data integrations.D. Avoid supplying credentials when creating a stage or when loading or unloading data - By using a storage integration, Snowflake can interact with external storage without the need to continuously manage or expose sensitive credentials, enhancing security and ease of operations.References: Snowflake documentation on storage integrations, found within the SnowPro Advanced: Architect course materials.

The following table exists in the production database:

A regulatory requirement states that the company must mask the username for events that are older than six months based on the current date when the data is queried.

How can the requirement be met without duplicating the event data and making sure it is applied when creating views using the table or cloning the table?

Use a masking policy on the username column using a entitlement table with valid dates.

Use a row level policy on the user_events table using a entitlement table with valid dates.

Use a masking policy on the username column with event_timestamp as a conditional column.

Use a secure view on the user_events table using a case statement on the username column.

A masking policy is a feature of Snowflake that allows masking sensitive data in query results based on the role of the user and the condition of the data. A masking policy can be applied to a column in a table or a view, and it can use another column in the same table or view as a conditional column. A conditional column is a column that determines whether the masking policy is applied or not based on its value1.

In this case, the requirement can be met by using a masking policy on the username column with event_timestamp as a conditional column. The masking policy can use a function that masks the username if the event_timestamp is older than six months based on the current date, and returns the original username otherwise. The masking policy can be applied to the user_events table, and it will also be applied when creating views using the table or cloning the table2.

The other options are not correct because:

References:

A company is trying to Ingest 10 TB of CSV data into a Snowflake table using Snowpipe as part of Its migration from a legacy database platform. The records need to be ingested in the MOST performant and cost-effective way.

How can these requirements be met?

Use ON_ERROR = continue in the copy into command.

Use purge = TRUE in the copy into command.

Use FURGE = FALSE in the copy into command.

Use on error = SKIP_FILE in the copy into command.

For ingesting a large volume of CSV data into Snowflake using Snowpipe, especially for a substantial amount like 10 TB, the on error = SKIP_FILE option in the COPY INTO command can be highly effective. This approach allows Snowpipe to skip over files that cause errors during the ingestion process, thereby not halting or significantly slowing down the overall data load. It helps in maintaining performance and cost-effectiveness by avoiding the reprocessing of problematic files and continuing with the ingestion of other data.

A company needs to share its product catalog data with one of its partners. The product catalog data is stored in two database tables: product_category, and product_details. Both tables can be joined by the product_id column. Data access should be governed, and only the partner should have access to the records.

The partner is not a Snowflake customer. The partner uses Amazon S3 for cloud storage.

Which design will be the MOST cost-effective and secure, while using the required Snowflake features?

Use Secure Data Sharing with an S3 bucket as a destination.

Publish product_category and product_details data sets on the Snowflake Marketplace.

Create a database user for the partner and give them access to the required data sets.

Create a reader account for the partner and share the data sets as secure views.

A reader account is a type of Snowflake account that allows external users to access data shared by a provider account without being a Snowflake customer. A reader account can be created and managed by the provider account, and can use the Snowflake web interface or JDBC/ODBC drivers to query the shared data. A reader account is billed to the provider account based on the credits consumed by the queries1. A secure view is a type of view that applies row-level security filters to the underlying tables, and masks the data that is not accessible to the user. A secure view can be shared with a reader account to provide granular and governed access to the data2. In this scenario, creating a reader account for the partner and sharing the data sets as secure views would be the most cost-effective and secure design, while using the required Snowflake features, because:

References:

What is a valid object hierarchy when building a Snowflake environment?

Account --> Database --> Schema --> Warehouse

Organization --> Account --> Database --> Schema --> Stage

Account --> Schema > Table --> Stage

Organization --> Account --> Stage --> Table --> View

This is the valid object hierarchy when building a Snowflake environment, according to the Snowflake documentation and the web search results. Snowflake is a cloud data platform that supports various types of objects, such as databases, schemas, tables, views, stages, warehouses, and more. These objects are organized in a hierarchical structure, as follows:

The other options listed are not valid object hierarchies, because they either omit or misplace some objects in the structure. For example, option A omits the organization level and places the warehouse under the schema level, which is incorrect. Option C omits the organization, account, and stage levels, and places the table under the schema level, which is incorrect. Option D omits the database level and places the stage and table under the account level, which is incorrect.

References:

A group of Data Analysts have been granted the role analyst role. They need a Snowflake database where they can create and modify tables, views, and other objects to load with their own data. The Analysts should not have the ability to give other Snowflake users outside of their role access to this data.

How should these requirements be met?

Grant ANALYST_R0LE OWNERSHIP on the database, but make sure that ANALYST_ROLE does not have the MANAGE GRANTS privilege on the account.

Grant SYSADMIN ownership of the database, but grant the create schema privilege on the database to the ANALYST_ROLE.

Make every schema in the database a managed access schema, owned by SYSADMIN, and grant create privileges on each schema to the ANALYST_ROLE for each type of object that needs to be created.

Grant ANALYST_ROLE ownership on the database, but grant the ownership on future [object type] s in database privilege to SYSADMIN.

The requirements state that the data analysts need to be able to create and modify database objects and load data, but should not be able to manage access for users outside of their role.

Option C: By making each schema within the database a managed access schema and having them owned by SYSADMIN, the ability to grant privileges on the schema's objects is strictly controlled. Managed access schemas limit the granting of privileges to the role specified as the owner of the schema, in this case, SYSADMIN. The ANALYST_ROLE can be granted the privileges necessary to create and modify objects within these schemas, satisfying the requirement for the analysts to perform their tasks without being able to extend access beyond their role.

An Architect needs to design a solution for building environments for development, test, and pre-production, all located in a single Snowflake account. The environments should be based on production data.

Which solution would be MOST cost-effective and performant?

Use zero-copy cloning into transient tables.

Use zero-copy cloning into permanent tables.

Use CREATE TABLE ... AS SELECT (CTAS) statements.

Use a Snowflake task to trigger a stored procedure to copy data.

Zero-copy cloning is a feature in Snowflake that allows for the creation of a clone of a database, schema, or table without duplicating any data, which is cost-effective as it saves on storage costs. Transient tables are temporary and do not incur storage costs for the time they are not accessed, making them a cost-effective option for development, test, and pre-production environments that do not require the durability of permanent tables123.

References

•Snowflake Documentation on Zero-Copy Cloning3.

•Articles discussing the cost-effectiveness and performance benefits of zero-copy cloning12.

A company has several sites in different regions from which the company wants to ingest data.

Which of the following will enable this type of data ingestion?

The company must have a Snowflake account in each cloud region to be able to ingest data to that account.

The company must replicate data between Snowflake accounts.

The company should provision a reader account to each site and ingest the data through the reader accounts.

The company should use a storage integration for the external stage.

This is the correct answer because it allows the company to ingest data from different regions using a storage integration for the external stage. A storage integration is a feature that enables secure and easy access to files in external cloud storage from Snowflake. A storage integration can be used to create an external stage, which is a named location that references the files in the external storage. An external stage can be used to load data into Snowflake tables using the COPY INTO command, or to unload data from Snowflake tables using the COPY INTO LOCATION command. A storage integration can support multiple regions and cloud platforms, as long as the external storage service is compatible with Snowflake12.

References:

A company has a Snowflake environment running in AWS us-west-2 (Oregon). The company needs to share data privately with a customer who is running their Snowflake environment in Azure East US 2 (Virginia).

What is the recommended sequence of operations that must be followed to meet this requirement?

1. Create a share and add the database privileges to the share

2. Create a new listing on the Snowflake Marketplace

3. Alter the listing and add the share

4. Instruct the customer to subscribe to the listing on the Snowflake Marketplace

1. Ask the customer to create a new Snowflake account in Azure EAST US 2 (Virginia)

2. Create a share and add the database privileges to the share

3. Alter the share and add the customer's Snowflake account to the share

1. Create a new Snowflake account in Azure East US 2 (Virginia)

2. Set up replication between AWS us-west-2 (Oregon) and Azure East US 2 (Virginia) for the database objects to be shared

3. Create a share and add the database privileges to the share

4. Alter the share and add the customer's Snowflake account to the share

1. Create a reader account in Azure East US 2 (Virginia)

2. Create a share and add the database privileges to the share

3. Add the reader account to the share

4. Share the reader account's URL and credentials with the customer

Option C is the correct answer because it allows the company to share data privately with the customer across different cloud platforms and regions. The company can create a new Snowflake account in Azure East US 2 (Virginia) and set up replication between AWS us-west-2 (Oregon) and Azure East US 2 (Virginia) for the database objects to be shared. This way, the company can ensure that the data is always up to date and consistent in both accounts. The company can then create a share and add the database privileges to the share, and alter the share and add the customer’s Snowflake account to the share. The customer can then access the shared data from their own Snowflake account in Azure East US 2 (Virginia).

Option A is incorrect because the Snowflake Marketplace is not a private way of sharing data. The Snowflake Marketplace is a public data exchange platform that allows anyone to browse and subscribe to data sets from various providers. The company would not be able to control who can access their data if they use the Snowflake Marketplace.

Option B is incorrect because it requires the customer to create a new Snowflake account in Azure East US 2 (Virginia), which may not be feasible or desirable for the customer. The customer may already have an existing Snowflake account in a different cloud platform or region, and may not want to incur additional costs or complexity by creating a new account.

Option D is incorrect because it involves creating a reader account in Azure East US 2 (Virginia), which is a limited and temporary way of sharing data. A reader account is a special type of Snowflake account that can only access data from a single share, and has a fixed duration of 30 days. The company would have to manage the reader account’s URL and credentials, and renew the account every 30 days. The customer would not be able to use their own Snowflake account to access the shared data, and would have to rely on the company’s reader account.

References:

What is a characteristic of loading data into Snowflake using the Snowflake Connector for Kafka?

The Connector only works in Snowflake regions that use AWS infrastructure.

The Connector works with all file formats, including text, JSON, Avro, Ore, Parquet, and XML.

The Connector creates and manages its own stage, file format, and pipe objects.

Loads using the Connector will have lower latency than Snowpipe and will ingest data in real time.

According to the SnowPro Advanced: Architect documents and learning resources, a characteristic of loading data into Snowflake using the Snowflake Connector for Kafka is that the Connector creates and manages its own stage, file format, and pipe objects. The stage is an internal stage that is used to store the data files from the Kafka topics. The file format is a JSON or Avro file format that is used to parse the data files. The pipe is a Snowpipe object that is used to load the data files into the Snowflake table. The Connector automatically creates and configures these objects based on the Kafka configuration properties, and handles the cleanup and maintenance of these objects1.

The other options are incorrect because they are not characteristics of loading data into Snowflake using the Snowflake Connector for Kafka. Option A is incorrect because the Connector works in Snowflake regions that use any cloud infrastructure, not just AWS. The Connector supports AWS, Azure, and Google Cloud platforms, and can load data across different regions and cloud platforms using data replication2. Option B is incorrect because the Connector does not work with all file formats, only JSON and Avro. The Connector expects the data in the Kafka topics to be in JSON or Avro format, and parses the data accordingly. Other file formats, such as text, ORC, Parquet, or XML, are not supported by the Connector3. Option D is incorrect because loads using the Connector do not have lower latency than Snowpipe, and do not ingest data in real time. The Connector uses Snowpipe to load data into Snowflake, and inherits the same latency and performance characteristics of Snowpipe. The Connector does not provide real-time ingestion, but near real-time ingestion, depending on the frequency and size of the data files4. References: Installing and Configuring the Kafka Connector | Snowflake Documentation, Sharing Data Across Regions and Cloud Platforms | Snowflake Documentation, Overview of the Kafka Connector | Snowflake Documentation, Using Snowflake Connector for Kafka With Snowpipe Streaming | Snowflake Documentation

At which object type level can the APPLY MASKING POLICY, APPLY ROW ACCESS POLICY and APPLY SESSION POLICY privileges be granted?

Global

Database

Schema

Table

The object type level at which the APPLY MASKING POLICY, APPLY ROW ACCESS POLICY and APPLY SESSION POLICY privileges can be granted is global. These are account-level privileges that control who can apply or unset these policies on objects such as columns, tables, views, accounts, or users. These privileges are granted to the ACCOUNTADMIN role by default, and can be granted to other roles as needed. The other options are incorrect because they are not the object type level at which these privileges can be granted. Database, schema, and table are lower-level object types that do not support these privileges. References: Access Control Privileges | Snowflake Documentation, Using Dynamic Data Masking | Snowflake Documentation, Using Row Access Policies | Snowflake Documentation, Using Session Policies | Snowflake Documentation

An Architect is troubleshooting a query with poor performance using the QUERY function. The Architect observes that the COMPILATION_TIME Is greater than the EXECUTION_TIME.

What is the reason for this?

The query is processing a very large dataset.

The query has overly complex logic.

The query Is queued for execution.

The query Is reading from remote storage

A company is using Snowflake in Azure in the Netherlands. The company analyst team also has data in JSON format that is stored in an Amazon S3 bucket in the AWS Singapore region that the team wants to analyze.

The Architect has been given the following requirements:

1. Provide access to frequently changing data

2. Keep egress costs to a minimum

3. Maintain low latency

How can these requirements be met with the LEAST amount of operational overhead?

Use a materialized view on top of an external table against the S3 bucket in AWS Singapore.

Use an external table against the S3 bucket in AWS Singapore and copy the data into transient tables.

Copy the data between providers from S3 to Azure Blob storage to collocate, then use Snowpipe for data ingestion.

Use AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then use an external table against the Blob storage.

Option A is the best design to meet the requirements because it uses a materialized view on top of an external table against the S3 bucket in AWS Singapore. A materialized view is a database object that contains the results of a query and can be refreshed periodically to reflect changes in the underlying data1. An external table is a table that references data files stored in a cloud storage service, such as Amazon S32. By using a materialized view on top of an external table, the company can provide access to frequently changing data, keep egress costs to a minimum, and maintain low latency. This is because the materialized view will cache the query results in Snowflake, reducing the need to access the external data files and incur network charges. The materialized view will also improve the query performance by avoiding scanning the external data files every time. The materialized view can be refreshed on a schedule or on demand to capture the changes in the external data files1.

Option B is not the best design because it uses an external table against the S3 bucket in AWS Singapore and copies the data into transient tables. A transient table is a table that is not subject to the Time Travel and Fail-safe features of Snowflake, and is automatically purged after a period of time3. By using an external table and copying the data into transient tables, the company will incur more egress costs and operational overhead than using a materialized view. This is because the external table will access the external data files every time a query is executed, and the copy operation will also transfer data from S3 to Snowflake. The transient tables will also consume more storage space in Snowflake and require manual maintenance to ensure they are up to date.

Option C is not the best design because it copies the data between providers from S3 to Azure Blob storage to collocate, then uses Snowpipe for data ingestion. Snowpipe is a service that automates the loading of data from external sources into Snowflake tables4. By copying the data between providers, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. Snowpipe will also add another layer of processing and storage in Snowflake, which may not be necessary if the external data files are already in a queryable format.

Option D is not the best design because it uses AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then uses an external table against the Blob storage. AWS Transfer Family is a service that enables secure and seamless transfer of files over SFTP, FTPS, and FTP to and from Amazon S3 or Amazon EFS5. By using AWS Transfer Family, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. The external table will also access the external data files every time a query is executed, which may affect the query performance.

References: 1: Materialized Views 2: External Tables 3: Transient Tables 4: Snowpipe Overview 5: AWS Transfer Family

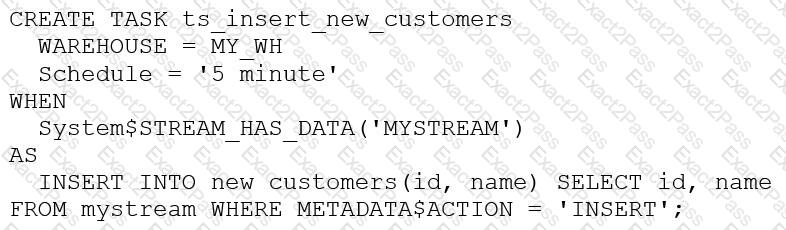

The following DDL command was used to create a task based on a stream:

Assuming MY_WH is set to auto_suspend – 60 and used exclusively for this task, which statement is true?

The warehouse MY_WH will be made active every five minutes to check the stream.

The warehouse MY_WH will only be active when there are results in the stream.

The warehouse MY_WH will never suspend.

The warehouse MY_WH will automatically resize to accommodate the size of the stream.

The warehouse MY_WH will only be active when there are results in the stream. This is because the task is created based on a stream, which means that the task will only be executed when there are new data in the stream. Additionally, the warehouse is set to auto_suspend - 60, which means that the warehouse will automatically suspend after 60 seconds of inactivity. Therefore, the warehouse will only be active when there are results in the stream. References:

Which Snowflake objects can be used in a data share? (Select TWO).

Standard view

Secure view

Stored procedure

External table

Stream

https://docs.snowflake.com/en/user-guide/data-sharing-intro

The data share exists between a data provider account and a data consumer account. Five tables from the provider account are being shared with the consumer account. The consumer role has been granted the imported privileges privilege.

What will happen to the consumer account if a new table (table_6) is added to the provider schema?

The consumer role will automatically see the new table and no additional grants are needed.

The consumer role will see the table only after this grant is given on the consumer side:

grant imported privileges on database PSHARE_EDW_4TEST_DB to DEV_ROLE;

The consumer role will see the table only after this grant is given on the provider side:

use role accountadmin;

Grant select on table EDW.ACCOUNTING.Table_6 to share PSHARE_EDW_4TEST;

The consumer role will see the table only after this grant is given on the provider side:

use role accountadmin;

grant usage on database EDW to share PSHARE_EDW_4TEST ;

grant usage on schema EDW.ACCOUNTING to share PSHARE_EDW_4TEST ;

Grant select on table EDW.ACCOUNTING.Table_6 to database PSHARE_EDW_4TEST_DB ;

When a new table (table_6) is added to a schema in the provider's account that is part of a data share, the consumer will not automatically see the new table. The consumer will only be able to access the new table once the appropriate privileges are granted by the provider. The correct process, as outlined in option D, involves using the provider’s ACCOUNTADMIN role to grant USAGE privileges on the database and schema, followed by SELECT privileges on the new table, specifically to the share that includes the consumer's database. This ensures that the consumer account can access the new table under the established data sharing setup.References:

The IT Security team has identified that there is an ongoing credential stuffing attack on many of their organization’s system.

What is the BEST way to find recent and ongoing login attempts to Snowflake?

Call the LOGIN_HISTORY Information Schema table function.

Query the LOGIN_HISTORY view in the ACCOUNT_USAGE schema in the SNOWFLAKE database.

View the History tab in the Snowflake UI and set up a filter for SQL text that contains the text "LOGIN".

View the Users section in the Account tab in the Snowflake UI and review the last login column.

This view can be used to query login attempts by Snowflake users within the last 365 days (1 year). It provides information such as the event timestamp, the user name, the client IP, the authentication method, the success or failure status, and the error code or message if the login attempt was unsuccessful. By querying this view, the IT Security team can identify any suspicious or malicious login attempts to Snowflake and take appropriate actions to prevent credential stuffing attacks1. The other options are not the best ways to find recent and ongoing login attempts to Snowflake. Option A is incorrect because the LOGIN_HISTORY Information Schema table function only returns login events within the last 7 days, which may not be sufficient to detect credential stuffing attacks that span a longer period of time2. Option C is incorrect because the History tab in the Snowflake UI only shows the queries executed by the current user or role, not the login events of other users or roles3. Option D is incorrect because the Users section in the Account tab in the Snowflake UI only shows the last login time for each user, not the details of the login attempts or the failures.

Assuming all Snowflake accounts are using an Enterprise edition or higher, in which development and testing scenarios would be copying of data be required, and zero-copy cloning not be suitable? (Select TWO).

Developers create their own datasets to work against transformed versions of the live data.

Production and development run in different databases in the same account, and Developers need to see production-like data but with specific columns masked.

Data is in a production Snowflake account that needs to be provided to Developers in a separate development/testing Snowflake account in the same cloud region.

Developers create their own copies of a standard test database previously created for them in the development account, for their initial development and unit testing.

The release process requires pre-production testing of changes with data of production scale and complexity. For security reasons, pre-production also runs in the production account.

Zero-copy cloning is a feature that allows creating a clone of a table, schema, or database without physically copying the data. Zero-copy cloning is suitable for scenarios where the cloned object needs to have the same data and metadata as the original object, and where the cloned object does not need to be modified or updated frequently. Zero-copy cloning is also suitable for scenarios where the cloned object needs to be shared within the same Snowflake account or across different accounts in the same cloud region2

However, zero-copy cloning is not suitable for scenarios where the cloned object needs to have different data or metadata than the original object, or where the cloned object needs to be modified or updated frequently. Zero-copy cloning is also not suitable for scenarios where the cloned object needs to be shared across different accounts in different cloud regions. In these scenarios, copying of data would be required, either by using the COPY INTO command or by using data sharing with secure views3

The following are examples of development and testing scenarios where copying of data would be required, and zero-copy cloning would not be suitable:

The following are examples of development and testing scenarios where zero-copy cloning would be suitable, and copying of data would not be required:

Files arrive in an external stage every 10 seconds from a proprietary system. The files range in size from 500 K to 3 MB. The data must be accessible by dashboards as soon as it arrives.

How can a Snowflake Architect meet this requirement with the LEAST amount of coding? (Choose two.)

Use Snowpipe with auto-ingest.

Use a COPY command with a task.

Use a materialized view on an external table.

Use the COPY INTO command.

Use a combination of a task and a stream.

The requirement is for the data to be accessible as quickly as possible after it arrives in the external stage with minimal coding effort.

Option A: Snowpipe with auto-ingest is a service that continuously loads data as it arrives in the stage. With auto-ingest, Snowpipe automatically detects new files as they arrive in a cloud stage and loads the data into the specified Snowflake table with minimal delay and no intervention required. This is an ideal low-maintenance solution for the given scenario where files are arriving at a very high frequency.

Option E: Using a combination of a task and a stream allows for real-time change data capture in Snowflake. A stream records changes (inserts, updates, and deletes) made to a table, and a task can be scheduled to trigger on a very short interval, ensuring that changes are processed into the dashboard tables as they occur.

Which feature provides the capability to define an alternate cluster key for a table with an existing cluster key?

External table

Materialized view

Search optimization

Result cache

A materialized view is a feature that provides the capability to define an alternate cluster key for a table with an existing cluster key. A materialized view is a pre-computed result set that is stored in Snowflake and can be queried like a regular table. A materialized view can have a different cluster key than the base table, which can improve the performance and efficiency of queries on the materialized view. A materialized view can also support aggregations, joins, and filters on the base table data. A materialized view is automatically refreshed when the underlying data in the base table changes, as long as the AUTO_REFRESH parameter is set to true1.

References:

Which command will create a schema without Fail-safe and will restrict object owners from passing on access to other users?

create schema EDW.ACCOUNTING WITH MANAGED ACCESS;

create schema EDW.ACCOUNTING WITH MANAGED ACCESS DATA_RETENTION_TIME_IN_DAYS - 7;

create TRANSIENT schema EDW.ACCOUNTING WITH MANAGED ACCESS DATA_RETENTION_TIME_IN_DAYS = 1;

create TRANSIENT schema EDW.ACCOUNTING WITH MANAGED ACCESS DATA_RETENTION_TIME_IN_DAYS = 7;

A transient schema in Snowflake is designed without a Fail-safe period, meaning it does not incur additional storage costs once it leaves Time Travel, and it is not protected by Fail-safe in the event of a data loss. The WITH MANAGED ACCESS option ensures that all privilege grants, including future grants on objects within the schema, are managed by the schema owner, thus restricting object owners from passing on access to other users1.

References =

•Snowflake Documentation on creating schemas1

•Snowflake Documentation on configuring access control2

•Snowflake Documentation on understanding and viewing Fail-safe3

What built-in Snowflake features make use of the change tracking metadata for a table? (Choose two.)

The MERGE command

The UPSERT command

The CHANGES clause

A STREAM object

The CHANGE_DATA_CAPTURE command

In Snowflake, the change tracking metadata for a table is utilized by the MERGE command and the STREAM object. The MERGE command uses change tracking to determine how to apply updates and inserts efficiently based on differences between source and target tables. STREAM objects, on the other hand, specifically capture and store change data, enabling incremental processing based on changes made to a table since the last stream offset was committed.References: Snowflake Documentation on MERGE and STREAM Objects.

A user has the appropriate privilege to see unmasked data in a column.

If the user loads this column data into another column that does not have a masking policy, what will occur?

Unmasked data will be loaded in the new column.

Masked data will be loaded into the new column.

Unmasked data will be loaded into the new column but only users with the appropriate privileges will be able to see the unmasked data.

Unmasked data will be loaded into the new column and no users will be able to see the unmasked data.

According to the SnowPro Advanced: Architect documents and learning resources, column masking policies are applied at query time based on the privileges of the user who runs the query. Therefore, if a user has the privilege to see unmasked data in a column, they will see the original data when they query that column. If they load this column data into another column that does not have a masking policy, the unmasked data will be loaded in the new column, and any user who can query the new column will see the unmasked data as well. The masking policy does not affect the underlying data in the column, only the query results.

References:

How do Snowflake databases that are created from shares differ from standard databases that are not created from shares? (Choose three.)

Shared databases are read-only.

Shared databases must be refreshed in order for new data to be visible.

Shared databases cannot be cloned.

Shared databases are not supported by Time Travel.

Shared databases will have the PUBLIC or INFORMATION_SCHEMA schemas without explicitly granting these schemas to the share.

Shared databases can also be created as transient databases.

According to the SnowPro Advanced: Architect documents and learning resources, the ways that Snowflake databases that are created from shares differ from standard databases that are not created from shares are:

The other options are incorrect because they are not ways that Snowflake databases that are created from shares differ from standard databases that are not created from shares. Option B is incorrect because shared databases do not need to be refreshed in order for new data to be visible. The data consumers who access the shared databases can see the latest data as soon as the data providers update the data1. Option E is incorrect because shared databases will not have the PUBLIC or INFORMATION_SCHEMA schemas without explicitly granting these schemas to the share. The data consumers who access the shared databases can only see the objects that the data providers grant to the share, and the PUBLIC and INFORMATION_SCHEMA schemas are not granted by default4. Option F is incorrect because shared databases cannot be created as transient databases. Transient databases are databases that do not support Time Travel or Fail-safe, and can be dropped without affecting the retention period of the data. Shared databases are always created as permanent databases, regardless of the type of the source database5. References: Introduction to Secure Data Sharing | Snowflake Documentation, Cloning Objects | Snowflake Documentation, Time Travel | Snowflake Documentation, Working with Shares | Snowflake Documentation, CREATE DATABASE | Snowflake Documentation

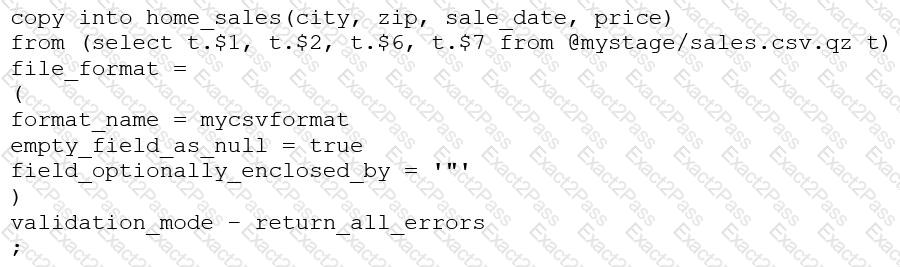

Consider the following COPY command which is loading data with CSV format into a Snowflake table from an internal stage through a data transformation query.

This command results in the following error:

SQL compilation error: invalid parameter 'validation_mode'

Assuming the syntax is correct, what is the cause of this error?

The VALIDATION_MODE parameter supports COPY statements that load data from external stages only.

The VALIDATION_MODE parameter does not support COPY statements with CSV file formats.

The VALIDATION_MODE parameter does not support COPY statements that transform data during a load.

The value return_all_errors of the option VALIDATION_MODE is causing a compilation error.

References: : COPY INTO