Last updated 8 hours ago. Total questions: 51

The Adobe Workfront Fusion Professional content is now fully updated, with all current exam questions added 8 hours ago. Deciding to include AD0-E902 practice exam questions in your study plan goes far beyond basic test preparation.

Our AD0-E902 exam questions frequently feature detailed scenarios and practical problem-solving exercises that mirror industry challenges. Working through these AD0-E902 sample sets helps you manage your time and pace yourself, so you can finish any Adobe Workfront Fusion Professional practice test comfortably within the allotted time.

Which statement about the differences between instant and polling triggers is true?

Which two actions are best practices for making a Fusion scenario easier to read, share and understand? (Choose two.)

A Fusion user needs to connect Workfront with a third-party system that does not have a dedicated app connector in Fusion.

What should the user do to build this integration?

A Fusion user must archive the last five versions of a scenario for one year.

What should the user do?

A user needs to dynamically create custom form field options in two customer environments.

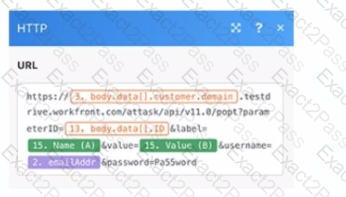

Given this image, which type of Workfront module is referenced in the formula with the parameterID value?

Given the array below, a user wants a comma-separated string of all stat names.

What is the correct expression?

A scenario is too large, with too many modules. Which technique can reduce the number of modules?